Scrape webpages with XPath and Python

We are going to use Python (a programming language widely used in education, industry and research) and XPath (a query language for selecting nodes from an XML document) to access a webpage, locate a piece of data on the page, retrieve the data and display it.

Python needs two libraries to be installed: lxml and requests.

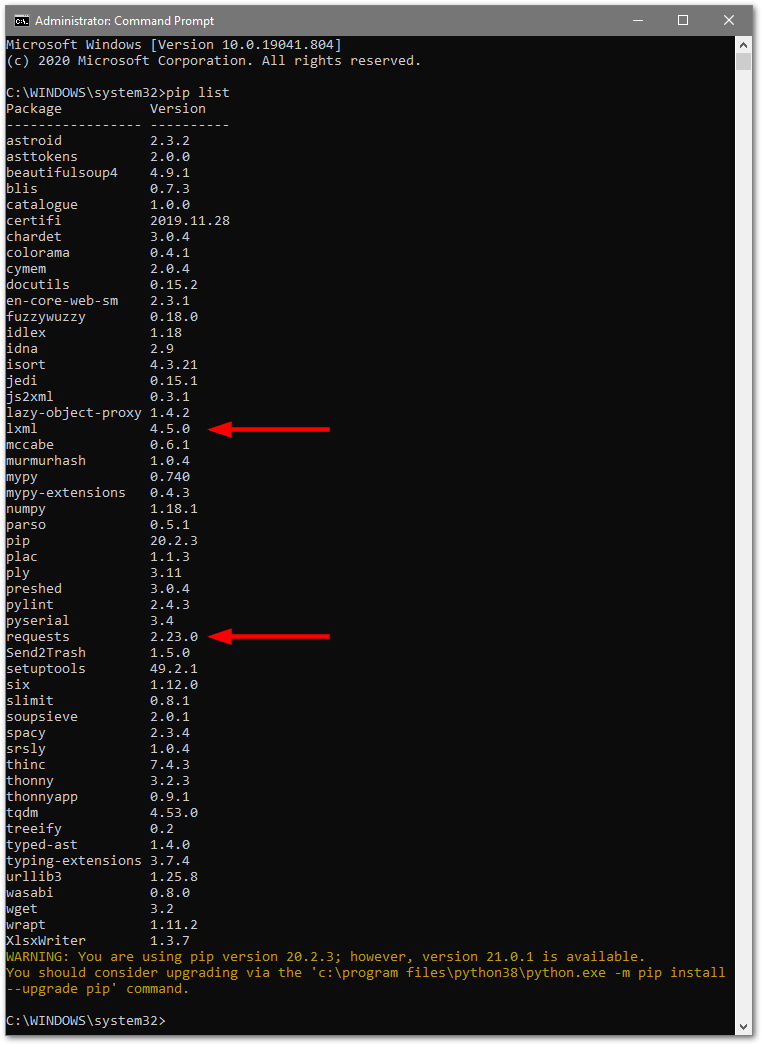

First, let's check whether they are installed. I'm working on a Windows machine.

1. Start an administrator command prompt

Click start and type

cmd

2. List all the installed Python packages

At the prompt, type

pip list

Oooo what a lot of packages
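If the list is too long to scan by eye, a short Python check is another option. This is a minimal sketch using the standard library's importlib.metadata (Python 3.8 or later); the helper name installed_version is my own and not part of any library.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Report on the two packages this tutorial needs.
for name in ("lxml", "requests"):
    version = installed_version(name)
    print(name, version if version else "not installed")
```

Anything reported as "not installed" needs the pip install step that follows.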

3. Install the required Python packages if necessary

If you can't see lxml and requests in the list, install them:

> pip install lxml

> pip install requests

Everything should end normally with a confirmation message saying that this and that have been installed. Check again using

pip list4

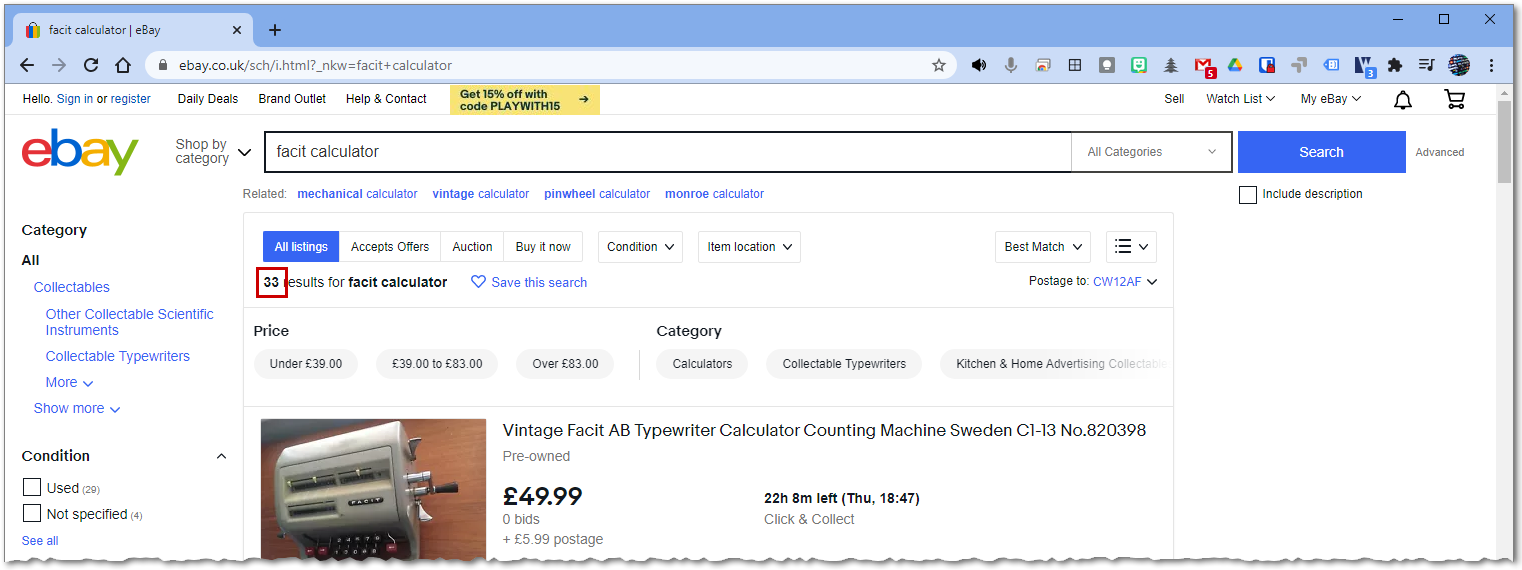

Choose a cool website to scrape

I'm going to scrape my favourite website, eBay, to find out how many items it finds for a particular search query. As an absolute minimum, eBay requires you to specify the search term in the URL parameter _nkw.

So, let's say I want to find out how many Facit calculators are listed on eBay at the moment. The URL would look like this...

https://www.ebay.co.uk/sch/i.html?_nkw=facit+calculator

Check it out and make a note of how many results you get. When I did it, I got 33.

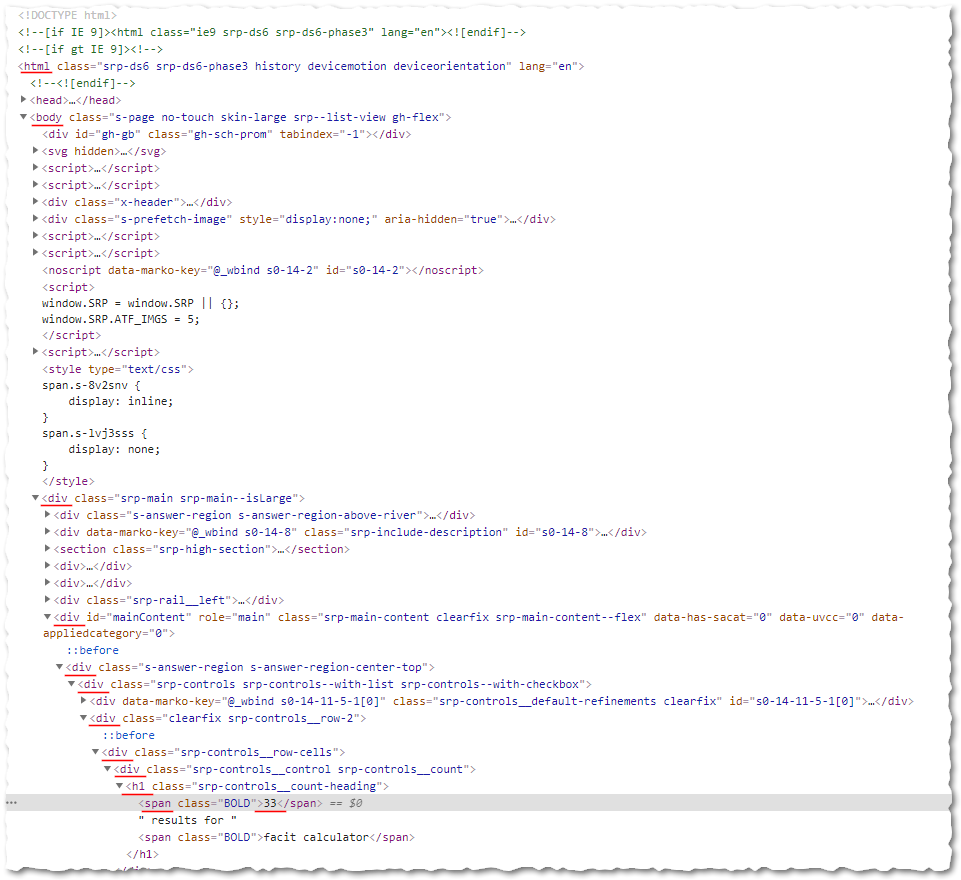

I've highlighted the result for you
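If you want to try other search terms, the URL can be built rather than typed by hand. This is a small sketch using the standard library's urlencode, which turns spaces into "+" as in the URL above; build_search_url and BASE_URL are names I have made up for illustration.

```python
from urllib.parse import urlencode

# The search page used in this tutorial.
BASE_URL = "https://www.ebay.co.uk/sch/i.html"


def build_search_url(search_term):
    """Return the search URL with the term placed in the _nkw parameter."""
    return BASE_URL + "?" + urlencode({"_nkw": search_term})


print(build_search_url("facit calculator"))
# prints https://www.ebay.co.uk/sch/i.html?_nkw=facit+calculator
```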

5

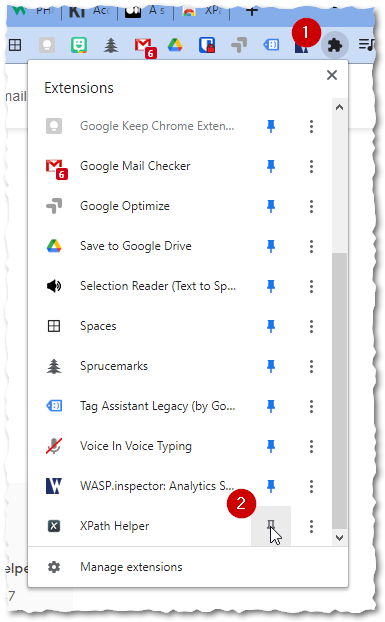

Use XPath Helper to find the path to the results

We need to find the "path" to this text in the document tree. You *could* view the source code and construct the path yourself but, for a big page like this, it's tough and error-prone. There is another way: if you are using Google Chrome, install an extension called XPath Helper. Make sure that the extension is active and refresh the page before you start (to give the extension permission to access the page).

Click the jigsaw and then the pin

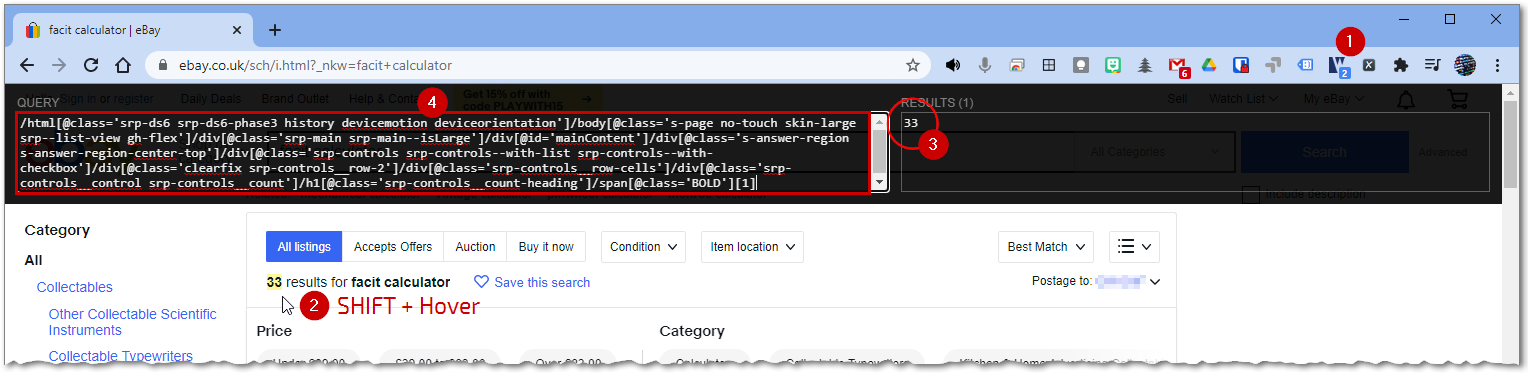

Now, when you click on the XPath Helper icon, a black panel should appear. Hold down the SHIFT key and hover over the number of results. You'll see a yellow highlight - as you move around, notice the 'path' in the left hand box which is the journey from the root "html" node to the part of the page you are hovering over. The right hand box shows you the content of that node, so you can check it's correct.

Follow the steps 1-4

When you have located the right node, let go of the SHIFT key and the path will remain fixed. Now, copy the path - we need to do a little editing of this before we put it into Python. You can express this path as an absolute route from the root to the desired node or you can choose a shorter route, starting at a unique node in the path. Normally, we would look for a node with an "id" attribute to start from, so that we could be sure that the path is unique. However, in this case, the nodes closest to our target are identified only by "class" attributes, so we can't guarantee the path is unique. Moreover, if there are any other instances of these classes in the page, our path may match more than one node.

In this situation, the safest way is to strip the path back to its skeletal form. We reduce this...

/html[@class='srp-ds6 srp-ds6-phase3 history devicemotion deviceorientation']/body[@class='s-page no-touch skin-large srp--list-view gh-flex']/div[@class='srp-main srp-main--isLarge']/div[@id='mainContent']/div[@class='s-answer-region s-answer-region-center-top']/div[@class='srp-controls srp-controls--with-list srp-controls--with-checkbox']/div[@class='clearfix srp-controls__row-2']/div[@class='srp-controls__row-cells']/div[@class='srp-controls__control srp-controls__count']/h1[@class='srp-controls__count-heading']/span[@class='BOLD'][1]

...to this...

/html/body/div/div/div/div/div/div/div/h1/span[1]
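The stripping can also be done mechanically rather than by hand. This is a rough sketch that uses a regular expression to delete every [@...] test while leaving positional tests such as [1] alone; strip_attribute_tests is a hypothetical helper, the example path is a shortened stand-in for the real one, and the pattern assumes no attribute value contains a "]" character.

```python
import re


def strip_attribute_tests(xpath):
    """Remove every [@attribute='value'] test, keeping positions like [1]."""
    return re.sub(r"\[@[^\]]*\]", "", xpath)


# A shortened, made-up version of the long path from XPath Helper.
example = (
    "/html[@class='a b']/body[@class='c']/div[@id='mainContent']"
    "/h1[@class='d']/span[@class='BOLD'][1]"
)

print(strip_attribute_tests(example))
# prints /html/body/div/h1/span[1]
```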

Removing the attribute tests means the path no longer depends on eBay's class names, only on the structure of the page. We then need to grab the content of the node (XPath Helper does not seem to include this) by adding /text() to the end:

/html/body/div/div/div/div/div/div/div/h1/span[1]/text()

You can actually see the path if you look at the HTML of the page. There is only one valid path to the last node, but there are two span elements at the end of it, which is why the path ends in span[1].

The one true path
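To see what span[1] does without touching the live site, here is a small sketch run against a made-up fragment of HTML. The markup is an assumption for illustration only (it is much simpler than the real eBay page), and it needs the lxml library installed earlier.

```python
from lxml import html

# A made-up, simplified page: one h1 containing two span elements.
sample = """
<html><body>
  <div><h1><span>29</span> results for <span>facit calculator</span></h1></div>
</body></html>
"""

tree = html.fromstring(sample)

# Without a position, both span elements match.
all_spans = tree.xpath("/html/body/div/h1/span/text()")

# With [1], only the first span matches.
first_span = tree.xpath("/html/body/div/h1/span[1]/text()")

print(all_spans)   # prints ['29', 'facit calculator']
print(first_span)  # prints ['29']
```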

6. Writing a simple Python program

So, let's get programming! This is a simple Python script which will request and download the webpage, then analyse the path we provide to give us the result. NOTE: This will only work for a website that you do not need to log into - we'll cover that scenario later 😄. Create the script using your favourite Python editor, save and run.

# First, we need the requests library to get the webpage

import requests

# The lxml library is used to convert html to an xml tree

from lxml import html

# Let's create a session object to handle the retrieval.

session = requests.Session()

# The most basic URL structure for Ebay.

url = 'https://www.ebay.co.uk/sch/i.html?_nkw=facit+calculator'

# Get the webpage. The page is returned as a text/html file.

page = session.get(url)

# Create an XML tree from the page text.

tree = html.fromstring(page.text)

# The XPath to the required data.

x = "/html/body/div/div/div/div/div/div/div/h1/span[1]/text()"

# Execute the xpath

results = tree.xpath(x)

# Print the result.

print(results)

If all has gone to plan, you should get the following output (although I got 33 before 🤪):

['29']

>>>

The xpath() method returns its results as a list, so we get the item we want (in this case, the first one) by...

>>> print(results[0])

29
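One thing to watch: if the page layout changes and the path finds nothing, the list will be empty and results[0] will raise an IndexError. The sketch below is one cautious way to turn the list into a number; parse_count is a hypothetical helper, and the comma handling assumes large counts are written like "1,234".

```python
def parse_count(results):
    """Return the first result as an int, or None if it is missing or not a number."""
    if not results:
        return None
    # Tidy the text: drop surrounding whitespace and thousands separators.
    text = results[0].strip().replace(",", "")
    return int(text) if text.isdigit() else None


print(parse_count(['29']))  # prints 29
print(parse_count([]))      # prints None
```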

It really is up to you what you do with this data. You could get the script to alert you when new items are listed perhaps?
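As a starting point for that idea, here is a minimal sketch that remembers the last count in a small JSON file and reports when the number has gone up. The function name, the file format and the "higher count means new items" rule are all my own assumptions; a rising count is only a rough signal, since listings also end.

```python
import json
from pathlib import Path


def check_for_new_items(current_count, state_file):
    """Save current_count and return True if it is higher than the saved count."""
    path = Path(state_file)
    previous = None
    if path.exists():
        # Read the count stored by the previous run.
        previous = json.loads(path.read_text()).get("count")
    # Store the new count for next time.
    path.write_text(json.dumps({"count": current_count}))
    return previous is not None and current_count > previous
```

You would call it with the number from the scraping script and a file name of your choice, for example check_for_new_items(29, "facit_count.json"), and print a message whenever it returns True.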

Last modified: October 5th, 2021